Hi to everybody!

I'm analyzing an RNA-seq dataset (n=28; 1 factor, 4 variables: normal, SM, WS, WB) and I'm finding some unexpected results when comparing the three tests provided by the edgeR package: exact test, glmLRT, glmQLF.

Usually, as a preliminary exploratory analysis, I perform all the three tests mentioned above and the results are in agreement; the only thing that usually changes is the number of DEGs (Exact test>glmLRT>glmQLF). Assume for instance the following dataset: CTRL, conditionA and conditionB. If the condition A affects the gene expression more than the condition B, thus the output of the three tests will be consistent (all saying the condition A determines the most relevant effects).

With this new dataset, I did the exploratory analysis and the exact test and the glmLRT are in agreement (no, or small effects), while the glmQLF produces several DEGS in the conditions WS and WB. Below the results

DEGs with exact test

SM vs Normal: 4

WS vs Normal: 0

WB vs Normal: 0

DEGs with glmLRT

SM vs Normal: 6

WS vs Normal: 0

WB vs Normal: 0

DEGs with glmQLF

SM vs Normal: 0

WS vs Normal: 143

WB vs Normal: 125

Why this result is so divergent??? Which test is reliable? Suggestions are welcome!!

Below I copy the "simplified" code.

treat <-factor (targets$myopathies, levels =c("Normal","WS","WB","SM"))

myDGEList <- DGEList(counts= myCounts, group=treat)

design <- model.matrix(~0+treat)

keep<-filterByExpr(myDGEList, group=treat)

filter<- myDGEList[keep, , keep.lib.sizes=FALSE]

myDGEList.filtered.norm <- normLibSizes(filter, method="TMM")

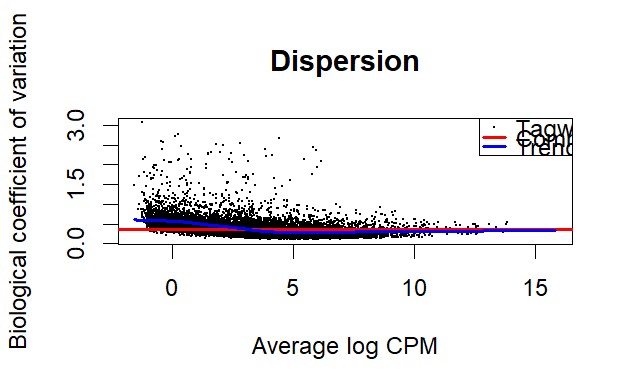

disp <- estimateDisp(myDGEList.filtered.norm, design, robust=TRUE)

### common disp: 0.128

## exact test

exact.SM <- exactTest(disp, pair=c("Normal","SM"))

## LRT

fit_lrt<- glmFit(disp, design, robust=TRUE)

LRTtest.SM<- glmLRT(fit_lrt, contrast=my.contrasts [, "d42_SM"])

## QLF

fit_qlf<- glmQLFit(disp, design, robust=TRUE)

QLFtest.SM<- glmQLFTest(fit_qlf, contrast=my.contrasts [, "d42_SM"])

Thank you for your time and attention!

Marianna

Which version of edgeR are you using? Please see the FAQ or the posting guide.

The code you show is incomplete because it does't explain the contrast that is being tested. You don't seem to be undertaking the same comparison for glmLRT and glmQLFTest as for exactTest. You could compare

SMtoNormal, same as for exactTest, by settingcontrast = c(-1,0,0,1). As it is, the contrast refers to a groupd42that is not mentioned elsewhere in your question.You're setting

robust=TRUEfor glmFit, but glmFit doesn't have arobustargument.Thank you Gordon Smyth for your reply!

I'm using edgeR v. 4.0.1.

I've set the same comparisons in the three tests. Contrasts are set as follows:

Sorry, I've just deleted some sections of the code to avoid a long post with unnecessary details.

glmFit doesn't have a robust argument, you're right. It has been a "copy and paste" mistake as I used it in the glmQLFit.