Hi,

I'd like to find stably expressed genes in RNA-seq projects.

I assumed that for each project I can compute expression values that are comparable between samples (not necessarily between genes) and judge stable expression by the coefficient of variation.

From what I have read, I understand that FPKM is not OK here and that I could use CPM, TPM, or the DESeq2 median of ratios, as stated at hbctraining.

As I've used DESeq2 previously, the last option seems optimal for me. Also, as I've read, calculating TPM from counts is only an approximation, as stated by Michael Love, and I don't have the fastq files anymore (due to disk space constraints), so I can't use Salmon or a similar program to calculate TPM. Theoretically I could download the fastq files again and process them, but I use many projects, so I think it is not time-efficient now.

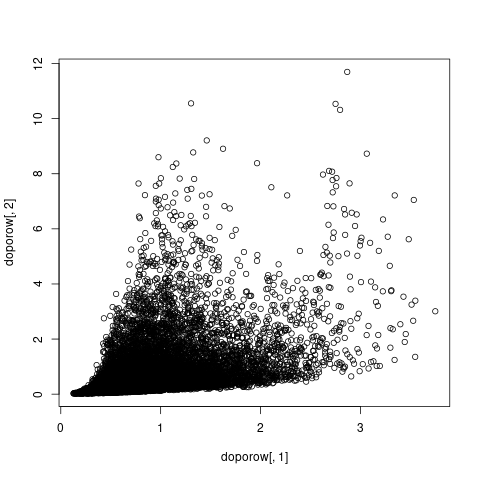

So can I normalize counts with `counts(dds, normalized=T)` (to get 'median of ratios' normalized values) and compute the coefficient of variation of these values to get a ranking of non-DE genes?
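For reference, a minimal sketch of that ranking. It assumes DESeq2 is installed and uses simulated data from `makeExampleDESeqDataSet()` as a stand-in for a real project. The low-count cutoff of 10 is an arbitrary illustrative choice, not a recommendation from this thread.

```r
library(DESeq2)

# Simulated data stand in for a real project; replace with your own dds.
set.seed(1)
dds <- makeExampleDESeqDataSet(n = 1000, m = 6)

# Estimate median-of-ratios size factors, then divide counts by them.
dds <- estimateSizeFactors(dds)
norm_counts <- counts(dds, normalized = TRUE)

# Drop low-count genes (assumed cutoff): their CV is dominated by counting noise.
keep <- rowMeans(norm_counts) >= 10
norm_counts <- norm_counts[keep, , drop = FALSE]

# Coefficient of variation per gene: standard deviation divided by mean.
gene_cv <- apply(norm_counts, 1, sd) / rowMeans(norm_counts)

# Smallest CV first: candidates for stably expressed genes.
cv_ranking <- sort(gene_cv)
head(cv_ranking, 20)
```

Note that for count data the CV tends to fall as the mean rises, so a plain CV ranking may favour highly expressed genes. Comparing genes within similar expression ranges is one way to account for that.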

Alternatively, could I use CPM values obtained from edgeR for example?
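A sketch of the edgeR route, assuming edgeR is installed. The count matrix is simulated and purely illustrative. When normalization factors have been computed on the `DGEList`, `cpm()` uses the effective library sizes, so the values are TMM-adjusted rather than scaled by raw library size alone.

```r
library(edgeR)

# Simulated counts (illustrative only): 1000 genes x 6 samples.
set.seed(1)
counts <- matrix(
  rnbinom(6000, mu = 100, size = 10),
  nrow = 1000,
  dimnames = list(paste0("gene", 1:1000), paste0("s", 1:6))
)

y <- DGEList(counts = counts)
y <- calcNormFactors(y)  # TMM normalization factors (the default method)

# CPM computed on effective library sizes (library size x norm factor).
cpm_tmm <- cpm(y)

# Same CV ranking as one would compute on DESeq2-normalized counts.
gene_cv <- apply(cpm_tmm, 1, sd) / rowMeans(cpm_tmm)
head(sort(gene_cv))
```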

Then I'd use the 'lessAbs' test from DESeq2, see vignette, which can identify non-DE genes by significance.
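A minimal sketch of that test, assuming DESeq2 is installed. The data are simulated with `betaSD = 0`, i.e. no true condition effect. The threshold of 0.5 and the `padj` cutoff of 0.1 are illustrative choices.

```r
library(DESeq2)

# Simulated data with no true condition effect (betaSD = 0), 6 vs 6 samples.
set.seed(1)
dds <- makeExampleDESeqDataSet(n = 1000, m = 12, betaSD = 0)
dds <- DESeq(dds)

# Null hypothesis: |log2 fold change| >= 0.5.
# A small adjusted p-value is evidence that the gene changes by LESS than that.
res <- results(dds, lfcThreshold = 0.5, altHypothesis = "lessAbs")

# Genes called "not changed beyond the threshold" at the assumed cutoff.
stable <- rownames(res)[which(res$padj < 0.1)]
length(stable)
```

With few replicates the log fold change estimates are imprecise, so this test may call very few genes even when most are truly unchanged.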

But they still exist somewhere, no? Otherwise it will be hard to publish.

The normalization methods of DESeq2 and edgeR perform very similarly, so it really does not matter which one you pick. Use either of the two, but not a method like RPKM or TPM that only corrects for library size and not for composition.
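To illustrate the composition point, here is a toy example in base R. The counts are made up: four genes are identical in both samples and one gene is ten times higher in the second. The median-of-ratios step is written out by hand as a simplified version of the idea behind DESeq2's estimator, and it assumes no zero counts.

```r
# Toy counts (made up): g1-g4 are unchanged, g5 is 10x higher in s2.
counts <- matrix(
  c(100, 200, 300, 400, 1000,
    100, 200, 300, 400, 10000),
  ncol = 2,
  dimnames = list(paste0("g", 1:5), c("s1", "s2"))
)

# Library-size-only scaling: counts per million.
cpm <- t(t(counts) / colSums(counts)) * 1e6

# Hand-written median of ratios (simplified; assumes no zero counts):
# size factor = median over genes of count / geometric mean across samples.
log_geo_means <- rowMeans(log(counts))
size_factors <- apply(counts, 2, function(x) exp(median(log(x) - log_geo_means)))
mor <- t(t(counts) / size_factors)

# Ratio s2/s1 for an unchanged gene under each normalization.
cpm_ratio <- cpm["g1", "s2"] / cpm["g1", "s1"]  # about 0.18
mor_ratio <- mor["g1", "s2"] / mor["g1", "s1"]  # 1
c(cpm = cpm_ratio, median_of_ratios = mor_ratio)
```

The single highly expressed gene inflates the library size of `s2`, so library-size scaling makes the four unchanged genes look strongly reduced, while the median-based size factors leave them equal.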

Thank you for the input. In fact I've tried `lessAbs` in `DESeq2`, but even for `lfcThreshold=.5` it hasn't detected any significant non-DE genes. So far I've tried it with a project containing only two reps per condition, so maybe for other projects with more replication it would be okay.

Thank you, so I'll try with size factor normalization from `DESeq2`.

The test is, afaik and by experience, quite conservative, so maybe be a bit more lenient with lfcThreshold and the significance cutoff (for example, in one project I used FC=1.75 and padj 0.1). Test all possible combinations of your groups, and then intersect. Use what is common. Something like that. Depending on what your goal is, this might just be "good enough".
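The "test all combinations, then intersect" step could look roughly like this in base R. The contrast names and gene IDs are hypothetical placeholders. In practice each vector would hold the genes passing the equivalence test for one pairwise comparison.

```r
# Hypothetical per-contrast sets of genes called non-DE.
stable_by_contrast <- list(
  treated_vs_control_wt  = c("geneA", "geneB", "geneC", "geneD"),
  treated_vs_control_mut = c("geneB", "geneC", "geneD", "geneE"),
  mut_vs_wt_control      = c("geneC", "geneD", "geneF")
)

# Keep only genes that are called non-DE in every contrast.
common_stable <- Reduce(intersect, stable_by_contrast)
common_stable
# "geneC" "geneD"
```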

Thanks for the suggestion. In each project I have two factors, genotype and treatment. I'd like to find stably expressed genes in each project, so I ignored genotype and tested treated vs control.

FC=1.75 and padj 0.1 don't sound good to me, so I'll try to use the CV of normalized counts.

Michael Love, what are your thoughts about it?